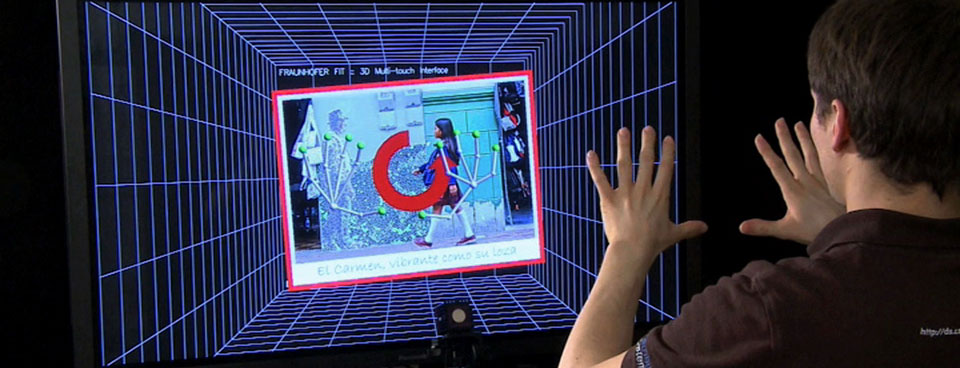

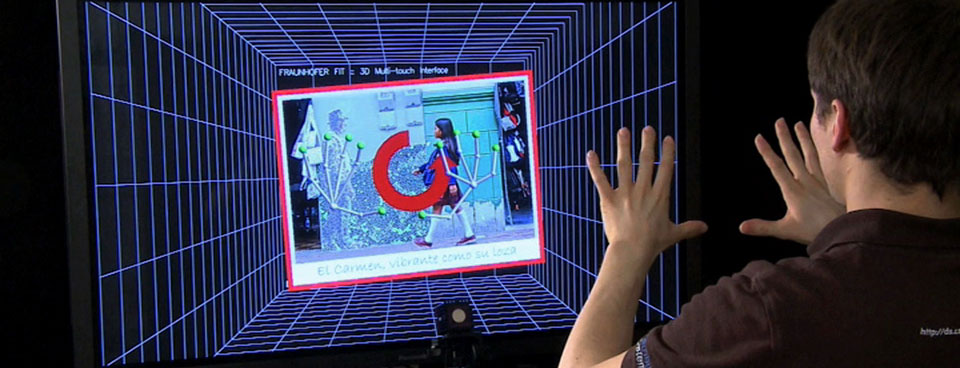

3-D Gesture-Based Interaction System

Touch screens such as those found on the iPhone or iPad are the latest form of technology allowing interaction with smartphones, computers and other devices. However, scientists at Fraunhofer FIT have developed a next-generation, non-contact gesture and finger recognition system. The system detects hand and finger positions in real time and translates them into appropriate interaction commands. Furthermore, it requires no special gloves or markers and can support multiple users.

With touch screens becoming increasingly popular, classic input devices such as the mouse and keyboard are being used less frequently. One breakthrough example is the Apple iPhone, which was released in summer 2007. Since then, many other devices featuring touch screens and similar characteristics have been launched successfully. More advanced devices, such as the Microsoft Surface table, whose entire surface can be used for input, even support multiple users simultaneously. However, this form of interaction is specifically designed for two-dimensional surfaces.

Fraunhofer FIT has developed the next generation of multi-touch environment, one that requires no physical contact and is entirely gesture-based. The system detects multiple fingers and hands at the same time and allows users to interact with objects on a display. Users move their hands and fingers in the air, and the system automatically recognizes and interprets the gestures. The system tracks the user's hand in front of a 3-D camera. The camera works on the time-of-flight principle: for each pixel, it measures how long light takes to travel to the tracked object and back. From this time, the distance between the camera and the tracked object can be calculated.
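
The distance calculation behind the time-of-flight principle can be illustrated with a minimal Python sketch. It is not Fraunhofer FIT's implementation. The function names and the assumption that the camera delivers one round-trip time in seconds per pixel are hypothetical and chosen only for illustration. Because the light travels to the object and back, the one-way distance is half the round-trip path.

```python
# Illustrative sketch only: names and input format are assumptions,
# not part of the Fraunhofer FIT system.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the camera-to-object distance in metres for one pixel."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    # Light covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def depth_map(round_trip_times):
    """Convert a 2-D grid of per-pixel round-trip times into distances."""
    return [[distance_from_round_trip(t) for t in row] for row in round_trip_times]
```

For example, a round-trip time of about 6.67 nanoseconds corresponds to an object roughly one metre from the camera.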

A special image analysis algorithm was developed that filters out the positions of the hands and fingers. This is achieved in real time through intelligent filtering of the incoming data. The raw data can be viewed as a kind of 3-D mountain landscape, with the peak regions representing the hands or fingers. In addition, plausibility criteria are applied, based on the size of a hand, finger length and the potential coordinates.
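
The "mountain landscape" view can be sketched in Python as a search for local peaks in a depth map, followed by a plausibility check. This is a simplified illustration, not the algorithm developed at Fraunhofer FIT. The function name `find_peaks`, the 8-neighbour comparison and the distance range used as a stand-in for the plausibility criteria are all assumptions; the actual criteria (hand size, finger length, potential coordinates) are not specified in detail here.

```python
# Illustrative sketch only: a naive peak search with an assumed distance
# range as the plausibility check. Not the Fraunhofer FIT algorithm.
def find_peaks(depth, min_dist, max_dist):
    """Return (row, col, distance) for candidate hand or finger pixels.

    A pixel is a peak if it is strictly closer to the camera than all of
    its 8 neighbours, and plausible if its distance lies in
    [min_dist, max_dist]. Border pixels are skipped for simplicity.
    """
    rows, cols = len(depth), len(depth[0])
    peaks = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            d = depth[r][c]
            # Plausibility check (assumed): reject points too near or too far.
            if not (min_dist <= d <= max_dist):
                continue
            neighbours = [
                depth[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            ]
            # A smaller distance means closer to the camera, i.e. a "peak".
            if all(d < n for n in neighbours):
                peaks.append((r, c, d))
    return peaks
```

A real-time system would need a far more efficient and noise-tolerant approach. The sketch only shows how peak regions and plausibility criteria combine to select candidate positions.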

The high resolution, in particular the recognition of fingers and fingertips, is a clear advantage over Microsoft's current Kinect system, which recognizes arms and hands. Our system allows, for the first time, fine motor manipulations in the virtual world without contact or specific instruments. Computer games and the presentation of information, e.g. at exhibitions, are obvious fields of application. Our technology also holds great potential for a variety of other application domains, e.g. the visual exploration of complex simulation data or novel forms of learning. One could even imagine controlling machines and household appliances with 3-D gestures.